Human-in-the-Loop Object-Goal Navigation

A human-aware object-goal navigation framework that enables robots to reach target objects while adapting their motion to respect human personal space in dynamic household environments.

Introduction & Motivation

Household environments are dynamic, shared spaces where humans move unpredictably, perform tasks, and frequently interact with objects. While robots must navigate efficiently to reach target objects, naïvely optimized object-goal navigation can obstruct human activity, cause discomfort, or disrupt natural human–robot coexistence. Most existing navigation systems focus primarily on collision avoidance, overlooking key aspects of social acceptability such as predictability, legibility, and respect for personal and working spaces. By incorporating humans into the navigation loop and modeling their behaviors, robots can adapt their motion based on human task context, movement patterns, and proximity, enabling safer, more comfortable, and minimally disruptive object-goal navigation in everyday household environments.

Research Question

We aim to design a household robot capable of navigating toward object goals while dynamically adapting its behavior to human presence in shared environments. Specifically, the robot should minimize disturbance by avoiding overly intrusive actions, adjust its navigation strategy to prioritize humans and avoid interfering with ongoing human–object interactions, and achieve these behaviors without sacrificing efficiency or goal accuracy.

Proposed Method

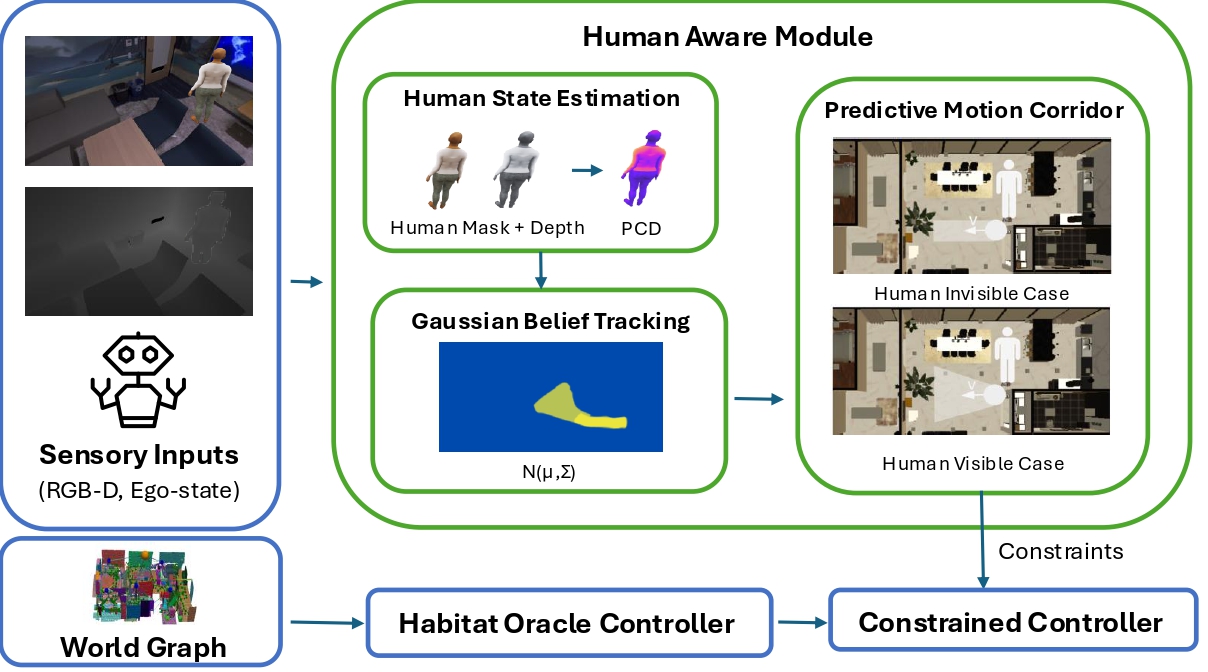

We propose a training-free, human-aware object-goal navigation module that augments a standard oracle navigation policy with explicit reasoning about human presence and motion uncertainty. The method decomposes the problem into two parts: human modeling under partial observability, and robot planning with minimal intervention. This yields socially aware navigation that preserves task success at a small cost in completion time.

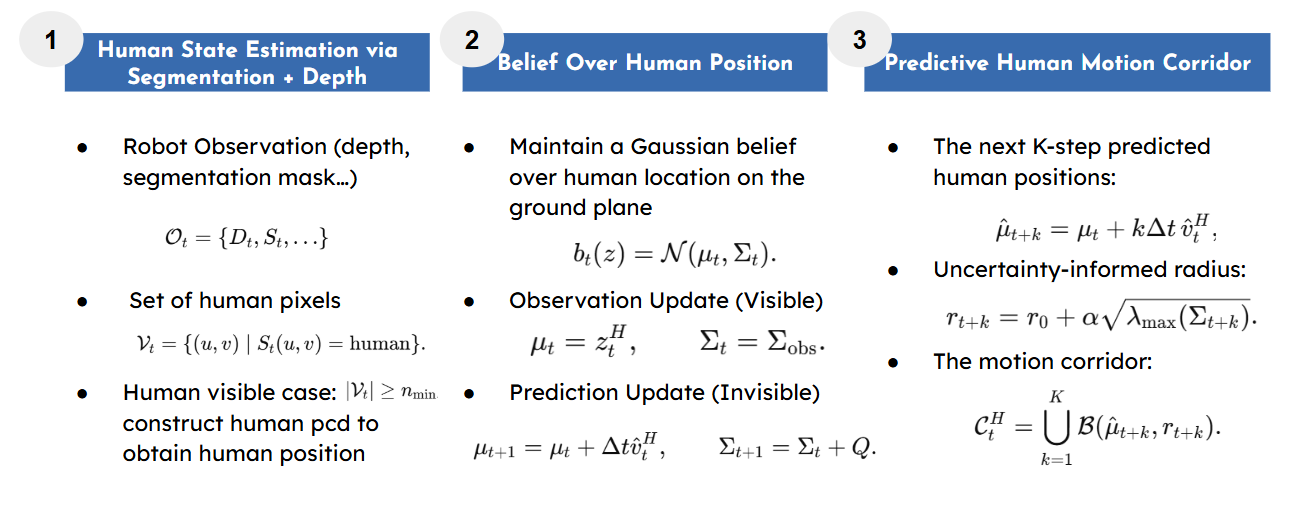

Human Modeling.

The human is treated as a dynamic, non-cooperative agent with unknown intent. Using segmentation and depth observations, we estimate the human’s ground-plane position when visible and maintain a Gaussian belief over their location when occluded. This belief is propagated forward using a constant-velocity model, capturing uncertainty due to sensing noise and intermittent visibility.
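As an illustration of the belief tracking described above, here is a minimal sketch, not the project's actual implementation. It assumes an isotropic Gaussian (a single scalar variance) for simplicity, and the names `HumanBelief`, `predict`, `update`, `step`, and the noise values are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class HumanBelief:
    """Isotropic Gaussian belief over the human's ground-plane position."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    var: float = 0.05  # position variance in m^2 (assumed initial value)


def predict(b: HumanBelief, dt: float, process_noise: float = 0.1) -> HumanBelief:
    """Constant-velocity propagation; the variance grows with elapsed time."""
    return HumanBelief(
        x=b.x + b.vx * dt,
        y=b.y + b.vy * dt,
        vx=b.vx,
        vy=b.vy,
        var=b.var + process_noise * dt,
    )


def update(b: HumanBelief, z: Tuple[float, float], dt: float,
           meas_var: float = 0.02) -> HumanBelief:
    """Fuse a position measurement z into a belief already predicted by dt."""
    k = b.var / (b.var + meas_var)  # scalar Kalman gain
    dx, dy = z[0] - b.x, z[1] - b.y
    return HumanBelief(
        x=b.x + k * dx,
        y=b.y + k * dy,
        vx=b.vx + k * dx / dt,  # crude velocity correction from the innovation
        vy=b.vy + k * dy / dt,
        var=(1.0 - k) * b.var,
    )


def step(b: HumanBelief, z: Optional[Tuple[float, float]], dt: float) -> HumanBelief:
    """One tracking step: always predict, and update only when the human is visible."""
    b = predict(b, dt)
    return update(b, z, dt) if z is not None else b
```

Passing `z=None` to `step` models an occluded frame: the mean keeps moving at the last estimated velocity while the variance grows, which is the behavior the belief is meant to capture under intermittent visibility.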

Predictive Motion Corridor.

From the belief distribution, we predict a short-horizon human motion corridor that represents the region the human is likely to occupy, with uncertainty-aware expansion. When belief uncertainty becomes too large, the corridor is discarded to avoid overly conservative behavior.
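One way to picture the corridor is as a chain of discs placed along the predicted path, with radii that grow with the belief's standard deviation. The sketch below is an assumption-laden illustration rather than the authors' code: the disc representation, the function names, and every parameter (`horizon`, `body_radius`, `k_sigma`, `max_sigma`, `process_noise`) are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Disc = Tuple[float, float, float]  # (center_x, center_y, radius)


def predict_corridor(x: float, y: float, vx: float, vy: float, var: float,
                     horizon: float = 2.0, dt: float = 0.5,
                     process_noise: float = 0.1, body_radius: float = 0.3,
                     k_sigma: float = 2.0,
                     max_sigma: float = 1.0) -> Optional[List[Disc]]:
    """Return discs covering the human's likely positions over a short horizon.

    Returns None when the current belief is too uncertain to be useful,
    so the planner falls back to the unmodified oracle behavior.
    """
    if math.sqrt(var) > max_sigma:
        return None
    discs: List[Disc] = []
    steps = int(round(horizon / dt))
    for i in range(steps + 1):
        t = i * dt
        sigma = math.sqrt(var + process_noise * t)  # uncertainty grows with lookahead
        discs.append((x + vx * t, y + vy * t, body_radius + k_sigma * sigma))
    return discs


def distance_to_corridor(px: float, py: float, corridor: List[Disc]) -> float:
    """Signed clearance from a point to the nearest disc (negative means inside)."""
    return min(math.hypot(px - cx, py - cy) - r for cx, cy, r in corridor)
```

Returning `None` for a very uncertain belief mirrors the discard rule in the text: a corridor inflated by a stale estimate would block large parts of the room and make the robot needlessly conservative.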

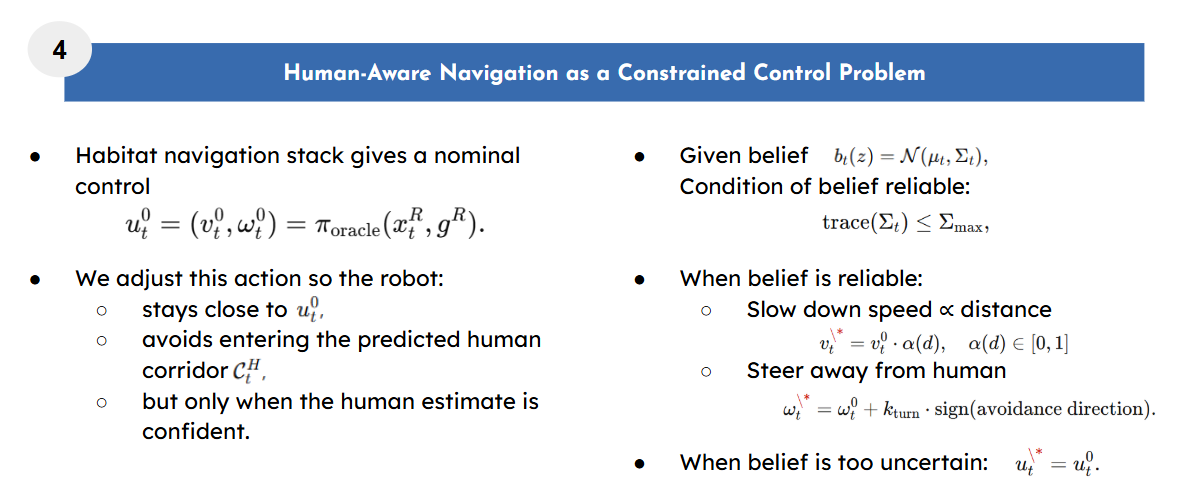

Human-Aware Robot Planning.

The robot follows the oracle navigation command by default and intervenes only when necessary. If the robot approaches the predicted human corridor within a safety margin, the control is smoothly modified by slowing down and steering away from the human. Otherwise, the original oracle action is preserved. This constrained control strategy ensures safety and social comfort while maintaining goal accuracy and efficiency.
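The minimal-intervention rule can be sketched as a thin wrapper around the oracle command. This is a hedged illustration only: the `(linear, angular)` command format, the function name `human_aware_command`, the linear blending, and the default values are assumptions for this example, not the policy interface used in the project.

```python
from typing import Tuple


def human_aware_command(oracle_cmd: Tuple[float, float], clearance: float,
                        bearing_to_human: float, safety_margin: float = 0.7,
                        min_speed_scale: float = 0.2,
                        max_turn: float = 0.5) -> Tuple[float, float]:
    """Modify the oracle (linear, angular) command only near the human corridor.

    clearance: signed distance from the robot to the corridor, in meters.
    bearing_to_human: angle to the human in the robot frame, in radians,
        positive when the human is to the robot's left (assumed convention,
        with positive angular velocity meaning a left turn).
    """
    v, w = oracle_cmd
    if clearance >= safety_margin:
        return oracle_cmd  # far from the corridor: keep the oracle action as is

    # 0 at the edge of the safety margin, 1 at or inside the corridor.
    closeness = min(1.0, max(0.0, 1.0 - clearance / safety_margin))

    # Slow down smoothly, never below min_speed_scale of the oracle speed.
    speed_scale = 1.0 - (1.0 - min_speed_scale) * closeness

    # Steer away: turn right if the human is on the left, and vice versa.
    turn_dir = -1.0 if bearing_to_human >= 0.0 else 1.0
    return v * speed_scale, w + turn_dir * max_turn * closeness
```

Because the adjustment scales continuously with `closeness`, the command changes gradually as the robot enters the margin instead of switching abruptly between two behaviors.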

Experiments

Setup

We evaluate our human-aware navigation framework in the Habitat 3.0 simulator using PARTNR benchmark assets. Experiments are conducted in a high-fidelity apartment environment containing realistic furniture and a simulated human performing household tasks.

To test generalization rather than map memorization, we construct 8 episodes within the same static scene while varying:

- Object configurations: target objects are randomly spawned in valid locations.

- Human activities: the human executes different rearrangement tasks, resulting in diverse trajectories.

The robot and human pursue independent, non-collaborative objectives, coexisting in a shared space without explicit coordination.

Baselines

We compare three conditions:

- Human-Only: human performs tasks without a robot (upper bound on efficiency).

- Human + OracleNav: robot follows the default PARTNR object-goal navigation policy.

- Human + Human-Aware (Ours): robot uses our belief-based human-aware navigation.

Evaluation Metrics

We evaluate the trade-off between task efficiency and social compliance.

- Social Compliance (Proxemics):

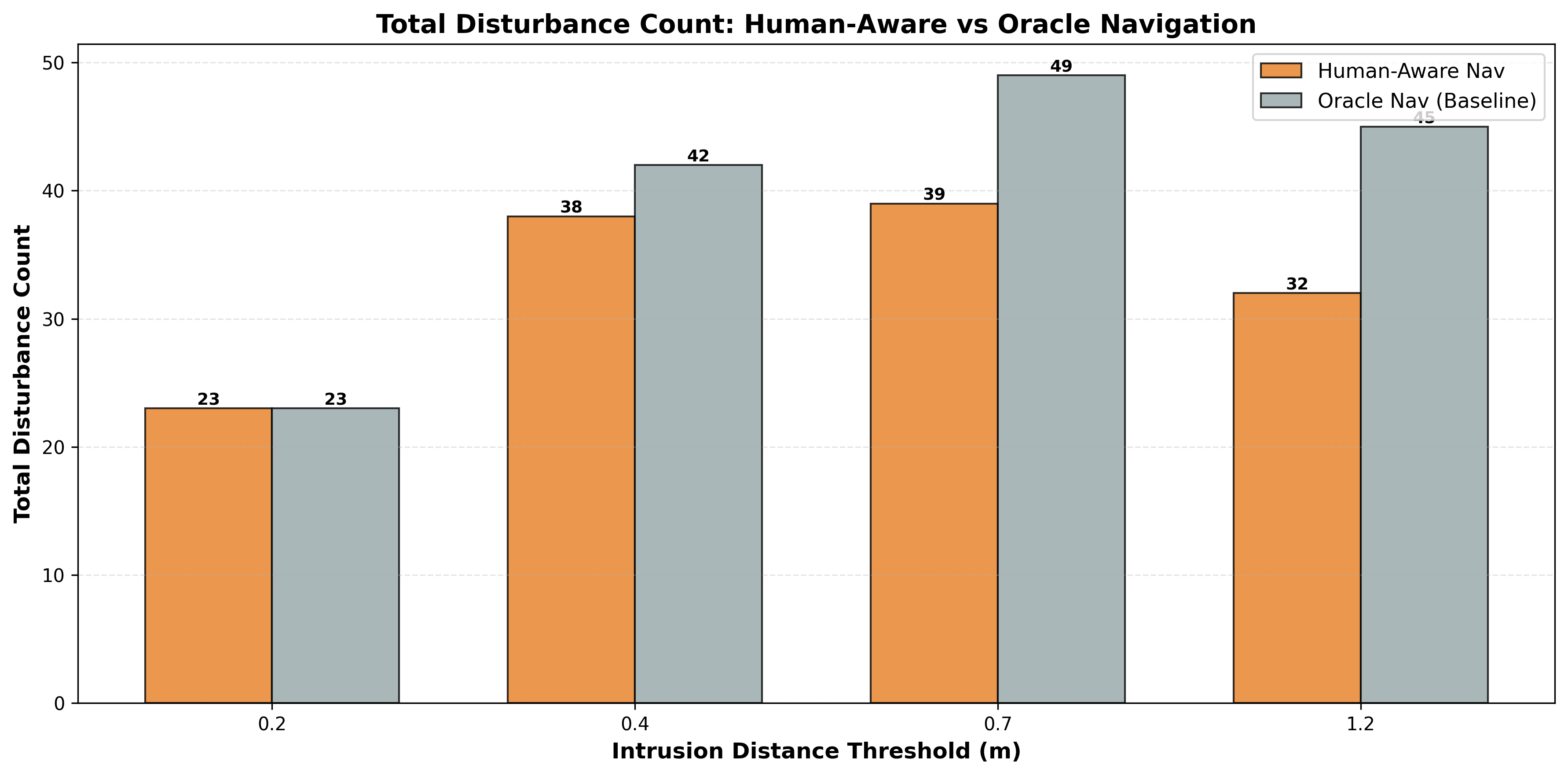

- Disturbance Count: number of robot intrusions within distance thresholds {0.2 m, 0.4 m, 0.7 m, 1.2 m}.

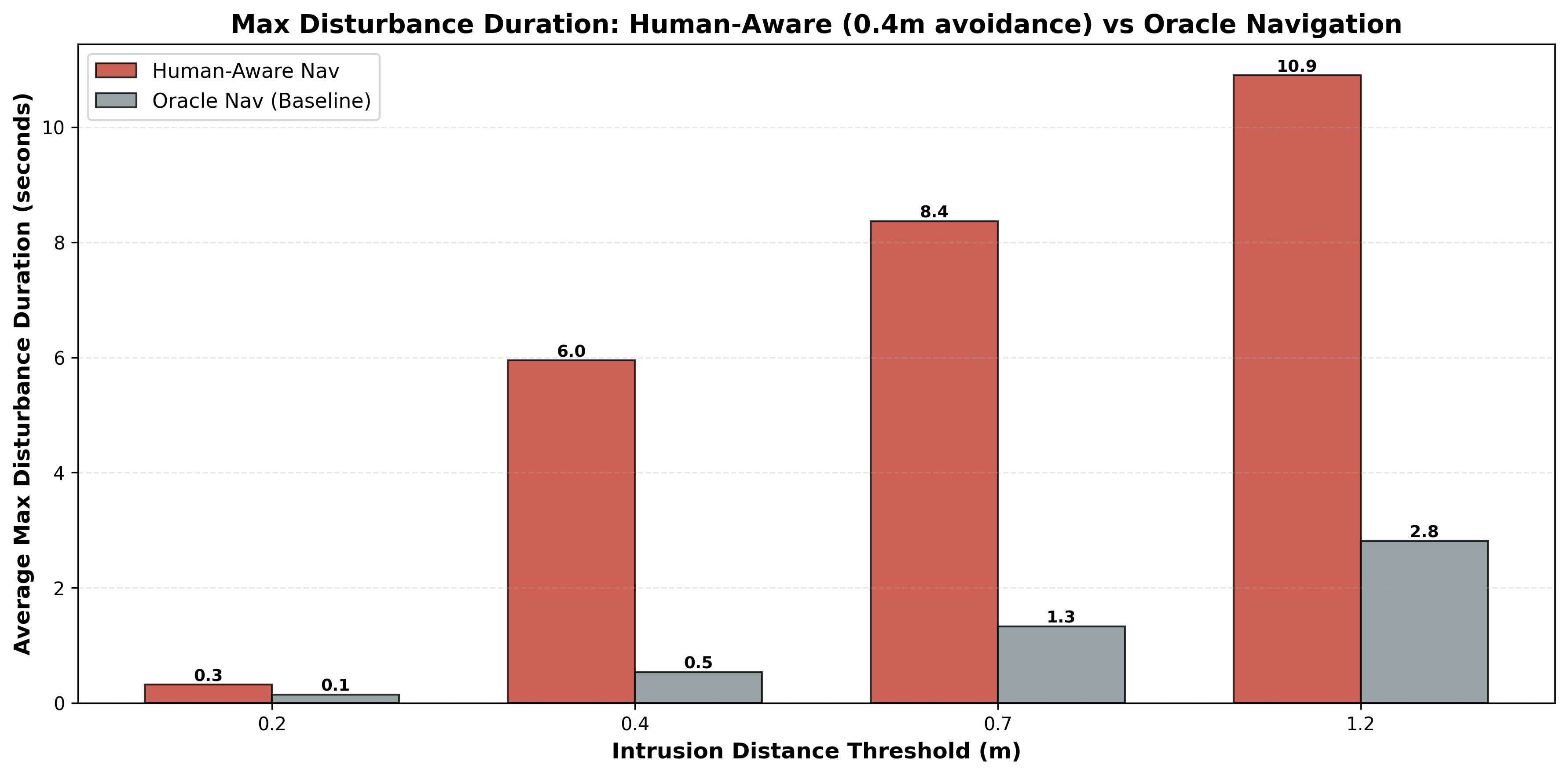

- Max Disturbance Duration: longest continuous time the robot remains within each threshold.

- Task Performance:

- Task completion rate.

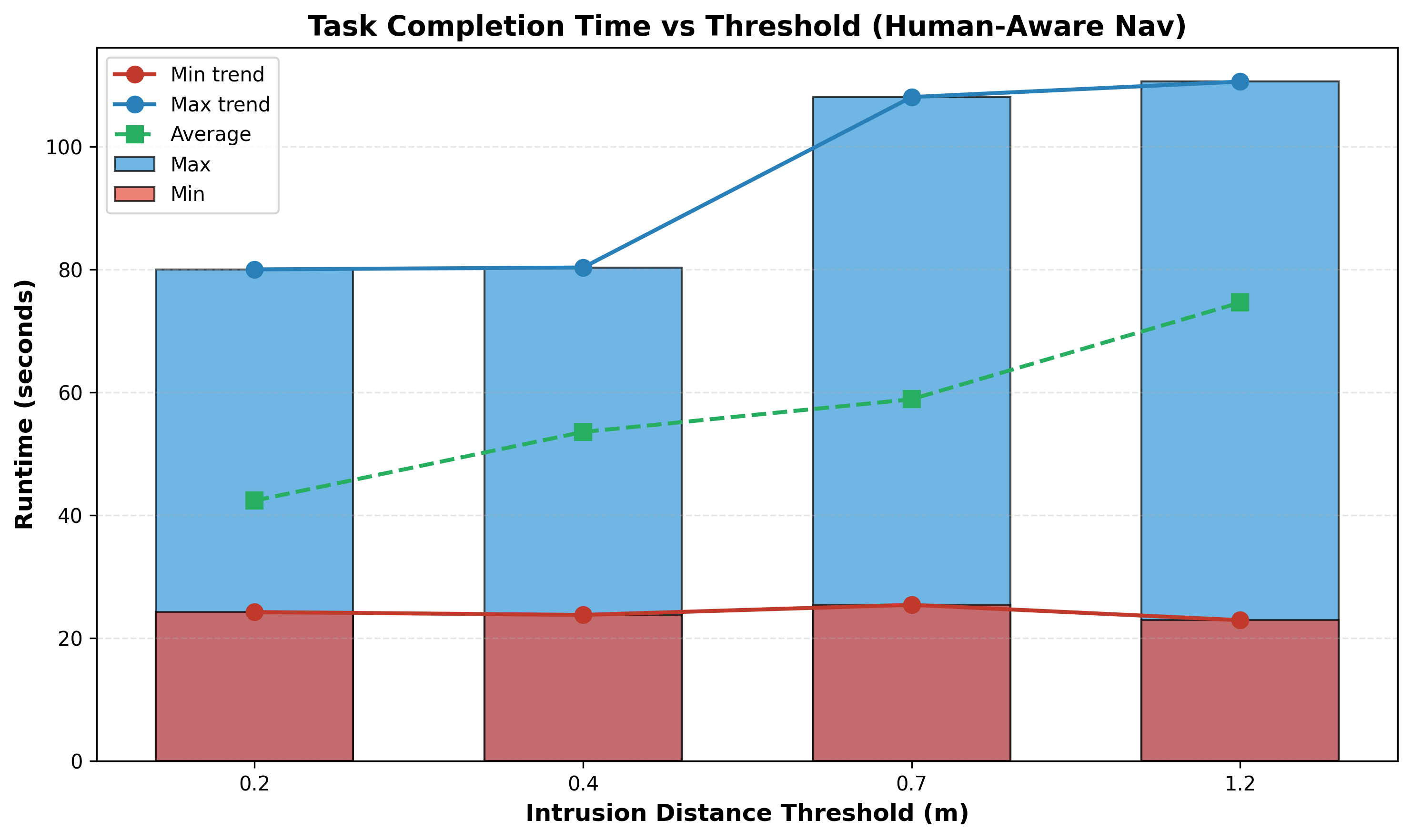

- Episode completion time (min, max, and average across episodes).
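The two proxemics metrics can be computed from a per-step log of robot-to-human distances. The sketch below is one plausible reading, not the evaluation code used for the reported numbers. It assumes an intrusion is counted once each time the robot enters a threshold zone, and that distances are logged at a fixed time step `dt`. The function name `disturbance_metrics` is hypothetical.

```python
from typing import Dict, Sequence, Tuple

THRESHOLDS = (0.2, 0.4, 0.7, 1.2)  # meters, as listed in the metrics above


def disturbance_metrics(distances: Sequence[float], dt: float,
                        thresholds: Sequence[float] = THRESHOLDS
                        ) -> Dict[float, Tuple[int, float]]:
    """Return {threshold: (disturbance_count, max_duration_seconds)}.

    distances: robot-to-human distance at each simulation step, in meters.
    dt: duration of one step, in seconds.
    """
    results: Dict[float, Tuple[int, float]] = {}
    for th in thresholds:
        count = 0    # number of separate entries into the zone
        longest = 0  # longest run of consecutive steps inside the zone
        run = 0      # length of the current run
        for d in distances:
            if d < th:
                if run == 0:
                    count += 1  # a new intrusion begins on this step
                run += 1
                longest = max(longest, run)
            else:
                run = 0
        results[th] = (count, longest * dt)
    return results


if __name__ == "__main__":
    log = [1.5, 1.0, 0.6, 0.3, 0.6, 1.0, 1.5, 1.1, 1.3]
    for th, (n, dur) in disturbance_metrics(log, dt=0.5).items():
        print(f"< {th} m: {n} intrusion(s), longest {dur} s")
```

Under these assumptions, a robot that yields by stopping near the human yields few intrusions but a long maximum duration, which is why the two metrics are reported separately.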

Results Summary

For more details, please refer to the paper.

Our human-aware method consistently reduces social intrusions compared to the Oracle baseline, with up to 29% fewer disturbances in larger personal-space zones. While the robot may remain near the human for longer durations due to yielding behavior, these interactions are slow or stationary, improving legibility and perceived safety.

Task success rates remain unchanged across all conditions, indicating that social constraints do not block human progress. However, increased social awareness introduces a modest time cost: stricter safety thresholds lead to longer episode durations, highlighting a clear trade-off between time efficiency and social caution.